A Reduced-Precision Network for Image Reconstruction

Manu Mathew Thomas, Karthik Vaidyanathan, Gabor Liktor, and Angus G. Forbes

ACM Trans. Graph. (Proc. SIGGRAPH Asia), 39(6):231, 2020.

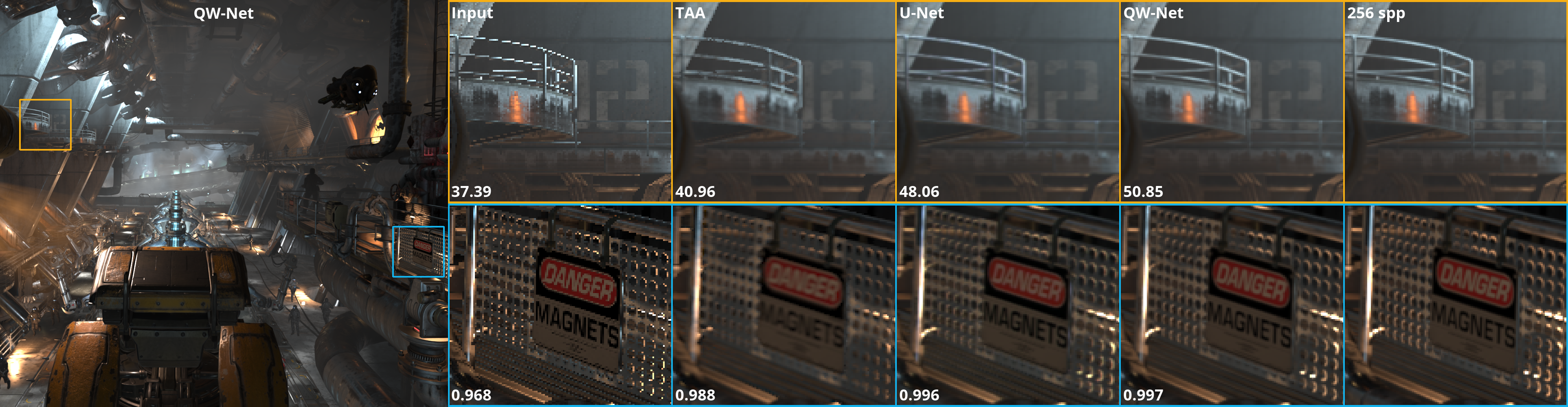

Neural networks are often quantized to use reduced-precision arithmetic, as this greatly reduces their storage and computational costs. This approach is commonly used in applications like image classification and natural language processing. However, using a quantized network to reconstruct HDR images can lead to a significant loss in image quality. In this paper, we introduce QW-Net, a neural network for image reconstruction in which close to 95% of the computations can be implemented with 4-bit integers. This is achieved by combining two U-shaped networks that are specialized for different tasks: a feature extraction network based on the U-Net architecture, coupled to a filtering network that reconstructs the output image. The feature extraction network has higher computational complexity but is more resilient to quantization errors. The filtering network, on the other hand, has significantly fewer computations but requires higher precision. Our network uses renderer-generated motion vectors to recurrently warp and accumulate previous frames, producing temporally stable results with significantly better quality than temporal anti-aliasing (TAA), a widely used technique in current games.
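The two core ideas in the abstract, 4-bit integer arithmetic and motion-vector warping of previous frames, can be illustrated with a minimal sketch. This is not QW-Net's method: the quantizer below is a generic symmetric per-tensor scheme (an assumption; the paper's exact scheme is not described here), and the accumulation step is a fixed-weight exponential moving average closer to TAA than to QW-Net's learned filtering. The names `quantize_int4`, `int4_dot`, `warp_previous`, `accumulate`, and the blend weight `alpha` are hypothetical, as is the convention that a motion vector `(dx, dy)` points from a pixel's previous position to its current one.

```python
from typing import List, Tuple

Q_MIN, Q_MAX = -8, 7  # signed 4-bit integer range

Image = List[List[float]]             # grayscale image indexed [y][x]
Motion = List[List[Tuple[int, int]]]  # hypothetical per-pixel (dx, dy) in whole pixels


def quantize_int4(values: List[float]) -> Tuple[List[int], float]:
    """Generic symmetric per-tensor quantization to signed 4-bit codes.

    Returns the integer codes and the scale that maps them back to floats.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / Q_MAX if max_abs > 0 else 1.0
    codes = [max(Q_MIN, min(Q_MAX, round(v / scale))) for v in values]
    return codes, scale


def int4_dot(a: List[float], b: List[float]) -> float:
    """Dot product on 4-bit codes, rescaled to float only once at the end."""
    qa, sa = quantize_int4(a)
    qb, sb = quantize_int4(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # pure integer multiply-accumulate
    return acc * sa * sb


def warp_previous(prev: Image, motion: Motion) -> Image:
    """Backward warp: pixel p fetches the previous frame at p - motion(p)."""
    h, w = len(prev), len(prev[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dx, dy = motion[y][x]
            sx = min(max(x - dx, 0), w - 1)  # clamp the source to image bounds
            sy = min(max(y - dy, 0), h - 1)
            row.append(prev[sy][sx])
        out.append(row)
    return out


def accumulate(current: Image, history: Image, motion: Motion,
               alpha: float = 0.1) -> Image:
    """Fixed-weight blend of the current frame with the warped history."""
    warped = warp_previous(history, motion)
    return [[alpha * c + (1 - alpha) * hist for c, hist in zip(cur_row, hist_row)]
            for cur_row, hist_row in zip(current, warped)]


if __name__ == "__main__":
    a, b = [0.5, -1.0, 0.25], [1.0, 0.5, -0.75]
    exact = sum(x * y for x, y in zip(a, b))
    print(f"exact={exact:.4f} int4={int4_dot(a, b):.4f}")
```

For the vectors in the usage example, the exact dot product is -0.1875 while the 4-bit version gives -10/49 (about -0.204), which shows the kind of error that, per the abstract, the feature extraction network tolerates better than the filtering network.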

Manu Mathew Thomas, UC Santa Cruz

Karthik Vaidyanathan, Intel Corporation

Gabor Liktor, Intel Corporation

Angus Forbes, UC Santa Cruz

Downloads

Paper (Author's version - PDF)

Supplementary Materials (ZIP)

Code (ZIP)

Data (Coming soon!)

Cite

@article{10.1145/3414685.3417786,
  title = {A Reduced-Precision Network for Image Reconstruction},
  author = {Manu Mathew Thomas and Karthik Vaidyanathan and Gabor Liktor and Angus G. Forbes},
  journal = {ACM Trans. Graph.},
  volume = {39},
  number = {6},
  articleno = {231},
  numpages = {12},
  year = {2020},
  doi = {10.1145/3414685.3417786}
}