Resonant Waves is an art-science project that reveals and celebrates how sounds can form complex symmetrical shapes and patterns. This multisensory artwork generates and processes wave interference patterns that are translated into dynamic geometries across different modalities. Participants simultaneously hear, feel and see the patterns created when a carefully selected range of sound frequencies generates motion in water. While sitting inside the installation (which consists of a projector, an armchair with attached bass transducers, colored ring lights and a camera aimed at a dish of vibrating water), participants see images of the motions projected onto a screen and feel vibrations that are amplified to create a somatic representation of the sounds that resonate throughout their bodies. By adjusting various sonic parameters, participants interactively explore the connection between sound and shape and are encouraged to observe how these immersive patterns affect them physically, mentally and emotionally.

Resonant Waves was created by Richard Grillotti and Andy DeLallo and first shown at the Digital Arts and New Media 2019 MFA thesis exhibition. A paper describing the project was presented by Richard Grillotti at SIGGRAPH’20. More information about the project can be found at resonantwaves.org.

Machine learning algorithms are becoming increasingly prevalent in our environment. Embedded in products and services we use on a daily basis, they rely on our personal information, searching for patterns in it and producing corresponding outcomes in return. Mostly we are unaware of this process, and we do not know how these systems “see” us.

The Classification Cube art installation invites viewers to interact with an ML classification system. Inside a seemingly private space within the context of an art gallery, viewers are confronted with two screens. One presents a prerecorded video of a diverse group of animated figures representing realistic yet unusual combinations of body features and movements. On the other screen, viewers see a video feed of their own bodies captured by a live webcam situated inside the space. Images on both screens are analyzed by an ML classification system whose outcomes update rapidly and are displayed on the screens. This analysis includes a face detection process and estimations of the age, gender, emotion and action of the bodies being analyzed. The immediate capture and analysis of viewers’ bodies, along with the comparison to other bodies inside the space, turns the installation into a platform for exploration.

Classification Cube was created by Avital Meshi and first shown at the Digital Arts and New Media 2019 MFA thesis exhibition. A paper describing the project was presented by Avital Meshi at SIGGRAPH’20. More information about the project can be found here.

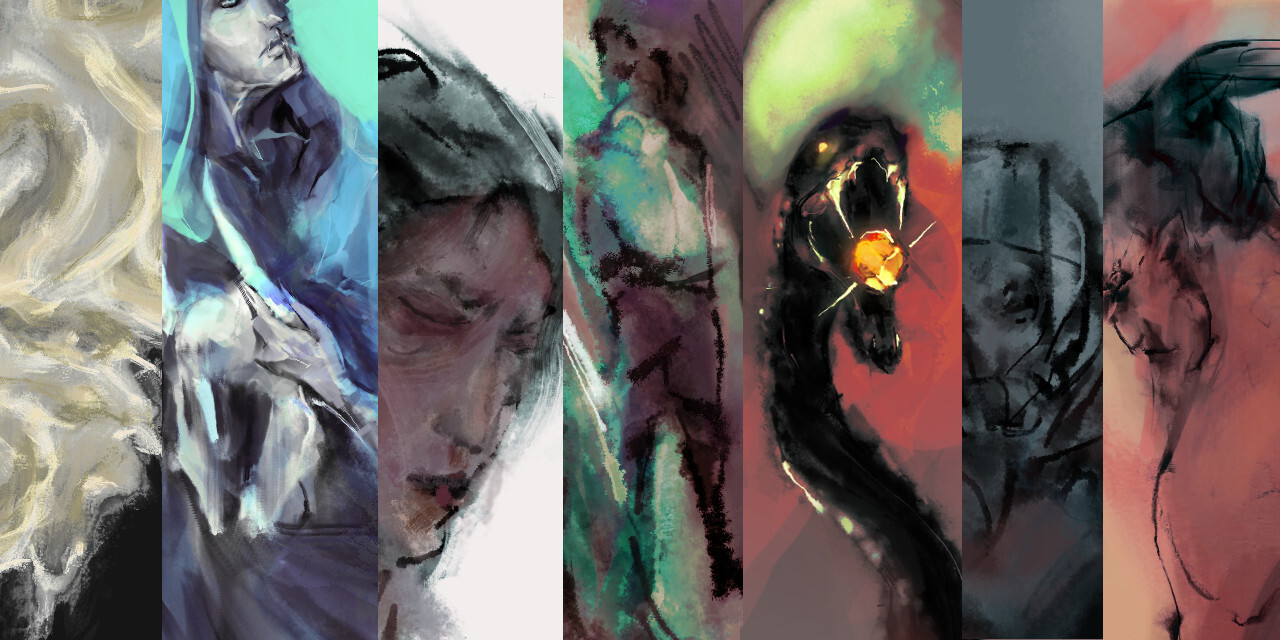

Narrative media may vary the adjacency of fixed textual passages to drive rhizomatic readings through a montage procedure. exul mater is a hypertext fiction that locates perlocutionary acts in virtual spaces and resonant gaps, providing a reflection on sculptural fiction, the (de)formance of complex systems, and tarot reading as methods of layering metaphorical blends into polysemous juxtapositional elements. exul mater is a computerized oracle deck, questioning cybernetic power and identity, which is performed by a querent who arranges illustrated cards to reveal narrative texts. Using combinatorial narrative, it probes the multiple faces of each figure in its encoded myth (heightened by tropes of science fantasy and magical corruption), whose contradictions and diffractions reveal their conflicted interiority. This deck argues against clinging to powerful stories for too long, and in favor of messy stories which fail to privilege any one reading, which can disrupt the imbalanced synthesis of history. exul mater was created by Jasmine Otto, and was featured in the (un)continuity exhibition at the Electronic Literature Organization (ELO) Conference and Media Arts Festival in July 2020.

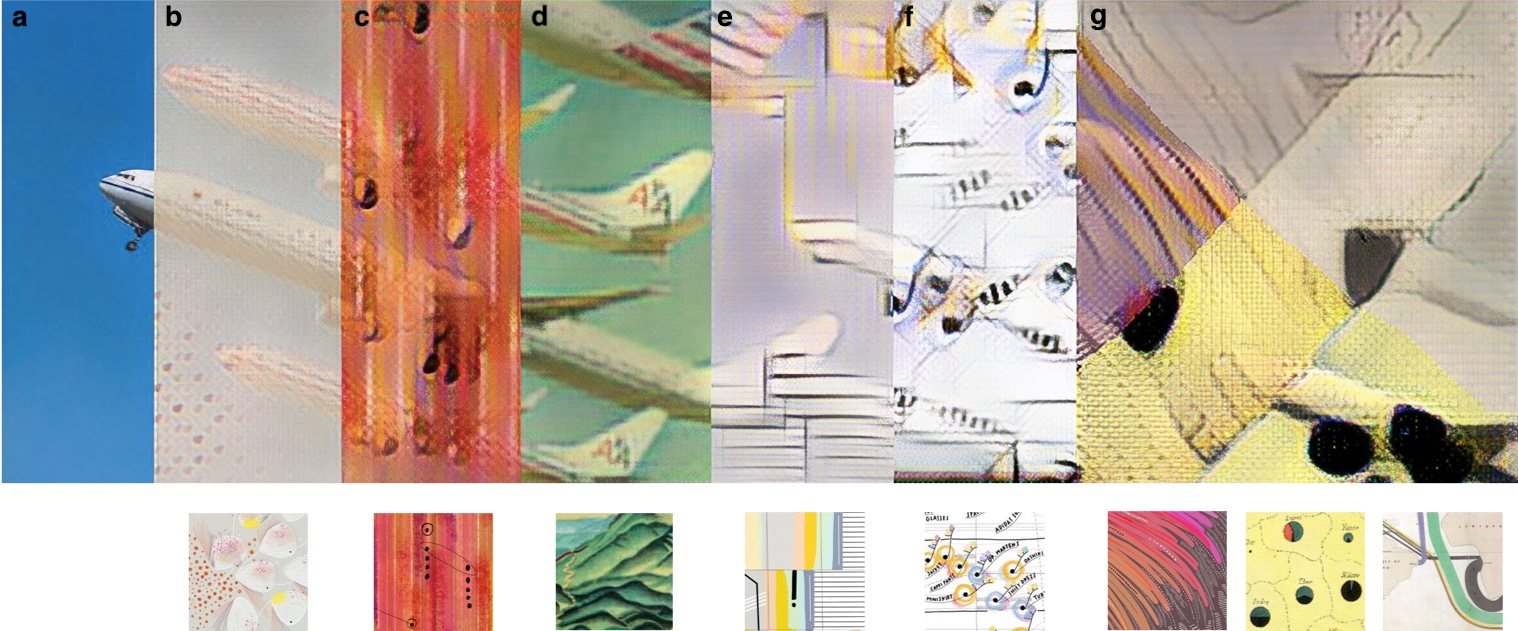

Data Brushes is an interactive web application that explores neural style transfer using models trained on data visualizations and data art. In addition to enabling a novel creative workflow, the process of interactively modifying an image via multiple style transfer networks reveals meaningful features encoded within the networks, and provides insight into the effects particular networks have on different images, or different regions within a single image. The application includes two distinct modes that invite casual creators to engage with deep convolutional neural networks to co-create custom artworks. The first mode, ‘magic markers’, mimics painting with a brush on a canvas, enabling users to paint a style onto selected areas of an image. The second mode, ‘compositing stamps’, uses a real-time method for applying style filters to selected portions of an image. Data Brushes was developed by Mahika Dubey, Jasmine Otto, and Angus Forbes, and was presented by Mahika Dubey at the IEEE VIS Arts Program in October 2019.

Imaginando Macondo is a public artwork that commemorates Nobel Prize-winning author Gabriel García Márquez.

It was first showcased at the Bogota International Book Fair in April 2015 to an audience of more than 300,000 over the course of two weeks. The project, developed by George Legrady, Andres Burbano, and Angus Forbes, involved extensive collaboration between an international team of artists, designers, and programmers, including Paul Murray and Lorenzo Di Tucci of UIC. Viewers participate by submitting and classifying a photograph of their choice via a kiosk or their mobile phone. The classification is based on literary themes that occur in García Márquez’s work, and user-submitted photos appear alongside images produced by well-known Colombian photographers. An article describing the project was published in IEEE Computer Graphics & Applications in 2015.

The electromagnetic spectrum is a vast expanse of varied energies that have been with us since the origin of the universe. Despite the great range of energies in the electromagnetic spectrum, our human senses can only detect a very limited portion, a portion we call visible light. It has been only in recent history that we have created technologies that enable us to harness light and other energies. Even though we may not be able to see these other energies with the naked eye, we have employed them in our communications. Radio is one such technology and the technological lens by which we “see” radio is a radio receiver. The RF project investigates creating pictures of the overall radio activity in a particular physical locale. Using an N6841A wideband RF sensor donated by Keysight Technologies, Brett Balogh, Anıl Çamcı, Paul Murray, and Angus Forbes devised a series of projects that explore our “lived electromagnetism”, including an interactive sonification of electromagnetic activity, real-time information visualization applications, and a VR experience where common RF signals were identified in various social scenarios. These projects were shown at the opening night of VISAP’15, and presented the following day in an artist talk by Brett Balogh.

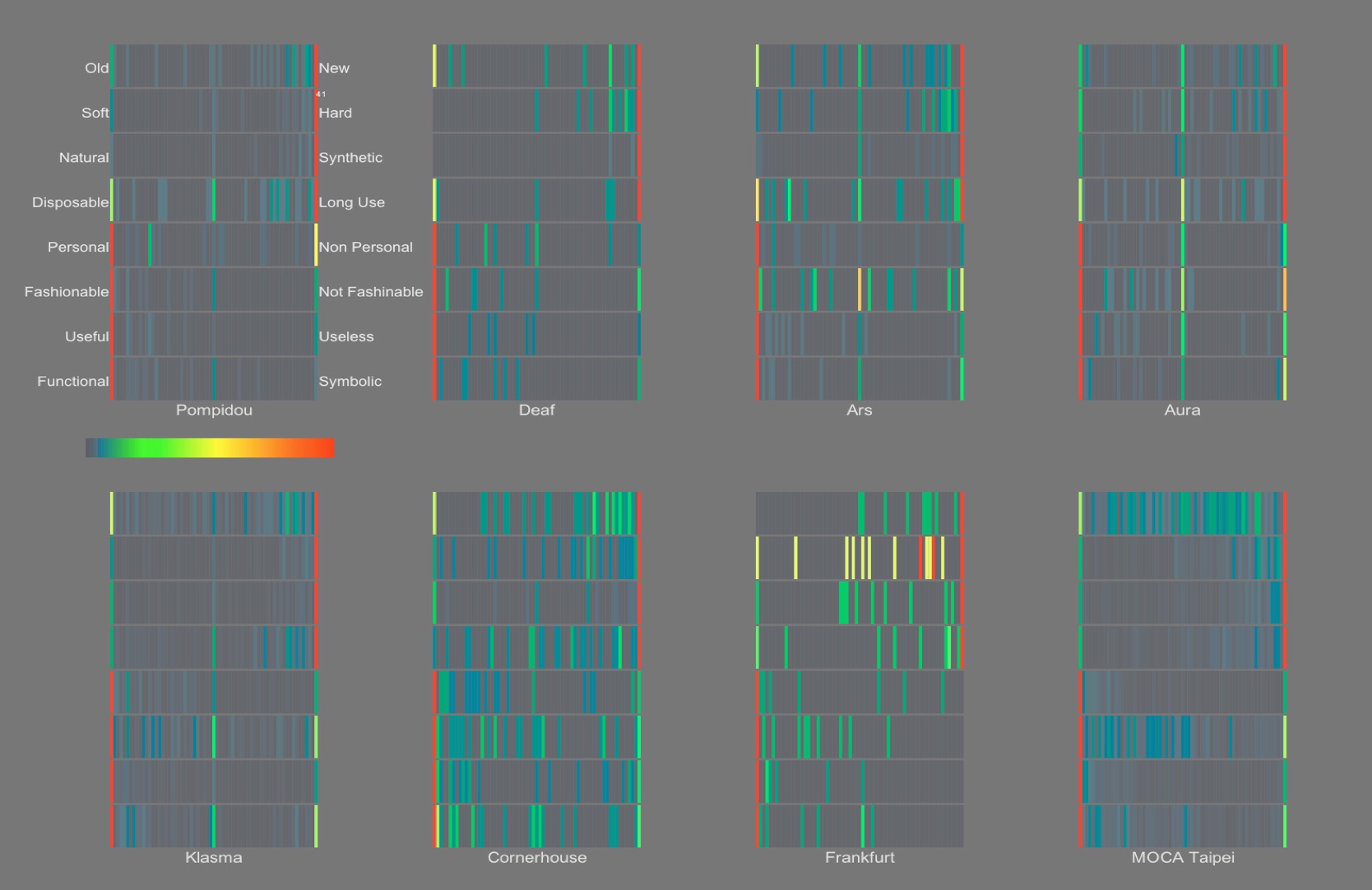

Works by media artists tend to be evaluated in terms of either cultural or pragmatic utility. The function of the media arts is often described by highlighting the societal contribution of creating products of cultural enrichment, introducing tools for promoting innovation or providing the means by which to think critically about the ethical ramifications of technology. The media arts are also seen as having the potential to help solve specific scientific and engineering problems, especially those having to do with creatively representing, interacting with, and reasoning about data. Many media artists also characterize their own work as critical reflection on technology, embracing technology while questioning the implications of its use. Articulating these multifaceted tensions between artistic outlooks and technical engagement in interdisciplinary art-science projects can be complex: what is the role of the artist in research collaborations? Many artists have wrestled with this question, but there is no clear methodological approach to conducting media arts research in these contexts.

Articles presented at VISAP’14, ArtsIT’14, SIGGRAPH’15, and recently accepted to Leonardo, investigate the role of the media artist in art-science contexts; articles written in collaboration with George Legrady explore a range of issues related to presenting data visualization in public arts venues.

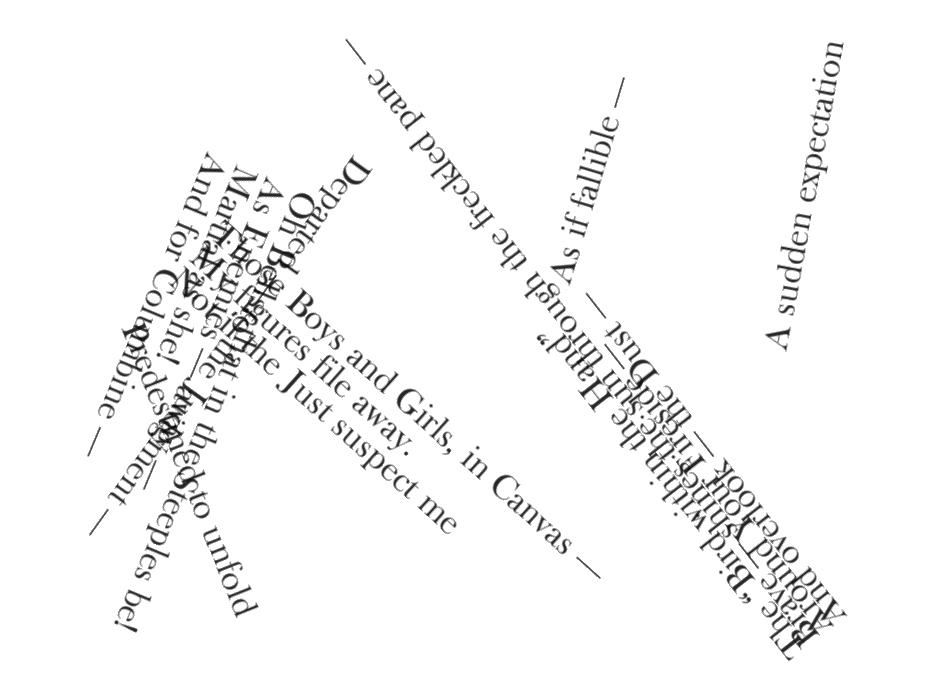

Poetry Chains is a series of animated text visualizations of the poetry of Emily Dickinson, first showcased in the Hybridity and Synesthesia exhibition at Lydgalleriet, as part of the Electronic Literature Organization Festival in Bergen, Norway in 2015.

The project is inspired by Lisa Samuels’ and Jerome McGann’s reading of a seemingly whimsical fragment found in a letter written by Dickinson: “Did you ever read one of her Poems backward, because the plunge from the front overturned you?” They investigate what it might mean to interpret this question literally, asking how a reader could “release or expose the poem’s possibilities of meaning” in order to explore the ways in which language is “an interactive medium.” Poetry Chains provides a continuous, dynamic remapping of Dickinson’s poems by treating her entire corpus as a single poem. A depth-first search is used to create collocation pathways between two words within the corpus, performing a non-linear “hopscotch” (with a poetic rather than narrative destabilization). A version of the animations (with no interaction) is available online, developed by Angus Forbes and Paul Murray.
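The idea of a depth-first search over collocations can be illustrated with a minimal sketch. This is not the project's actual implementation: it assumes that two words are "collocated" when they appear next to each other in the same line, uses a simple lowercase tokenizer, and the function names `build_collocation_graph` and `find_chain` are hypothetical. The sample lines are a few opening lines from Dickinson's poems, standing in for the full corpus.

```python
import re
from collections import defaultdict


def build_collocation_graph(lines):
    """Link every pair of words that sit side by side in a line.

    Assumption: adjacency within a line stands in for "collocation";
    the original project may define it differently.
    """
    graph = defaultdict(list)
    for line in lines:
        words = re.findall(r"[a-z']+", line.lower())
        for left, right in zip(words, words[1:]):
            if right not in graph[left]:
                graph[left].append(right)
            if left not in graph[right]:
                graph[right].append(left)
    return dict(graph)


def find_chain(graph, start, goal):
    """Iterative depth-first search for a chain of words from start to goal.

    Returns a list of words, or None when the two words are not connected.
    The chain is a valid pathway but not necessarily the shortest one.
    """
    stack = [(start, [start])]
    visited = set()
    while stack:
        word, path = stack.pop()
        if word == goal:
            return path
        if word in visited:
            continue
        visited.add(word)
        # Push neighbors in reverse so the first-seen neighbor is explored first.
        for neighbor in reversed(graph.get(word, [])):
            if neighbor not in visited:
                stack.append((neighbor, path + [neighbor]))
    return None


# Hypothetical stand-in corpus: a few opening lines treated as one text.
sample_lines = [
    "Because I could not stop for Death",
    "He kindly stopped for me",
    "Hope is the thing with feathers",
]
graph = build_collocation_graph(sample_lines)
chain = find_chain(graph, "death", "me")
print(" -> ".join(chain))
```

Because depth-first search follows one branch as far as it can before backtracking, it explores long detours before settling on a chain, which loosely echoes the "hopscotch" quality described above. A breadth-first search would instead return the shortest chain.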

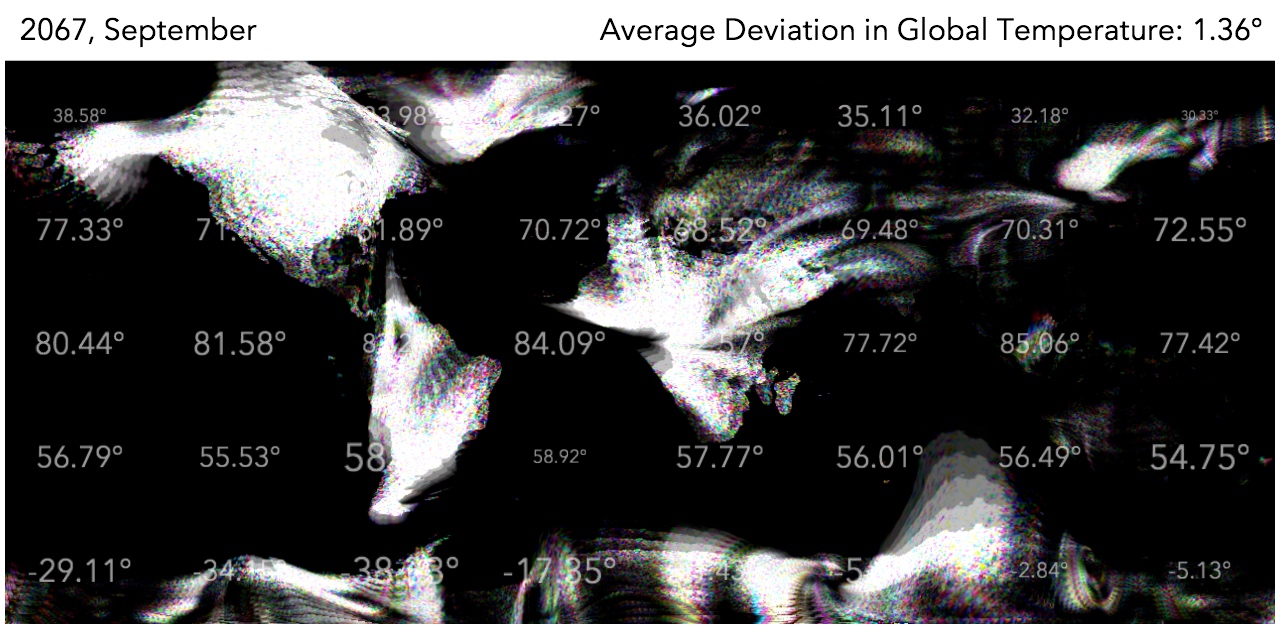

Turbulent World is a time-based artwork that displays an animated atlas that changes in response to the increased deviation in world temperature over the next century. The changes are represented by visual eddies, vortices, and quakes that distort the original map. Additionally, the projected temperatures are themselves shown across the world, increasing or decreasing in size to indicate the severity of the change. The data used in the artwork was generated by a sophisticated climate model that predicts the monthly variation in surface air temperature across different regions of the world through the end of the century. A write-up of the project was presented at ISEA’15 in Vancouver, British Columbia.

The Fluid Automata system comprises an interactive fluid simulation and a vector visualization technique that can be incorporated into media arts projects. These techniques have been adapted for various configurations, including mobile applications, interactive 2D and 3D projections, and multi-touch tables, and have been presented in a number of different environments, such as galleries, conferences, and a virtual reality research lab. Venues include Science City at Tucson Festival of Books (2013); Center for NanoScience Institute in Santa Barbara (2012); IEEE VisWeek Art Show in Providence, Rhode Island, curated by Bruce Campbell and Daniel Keefe (2011); and Questionable Utility at University of California, Santa Barbara, organized by Xárene Eskandar (2011). The technical details of the Fluid Automata system are described in a paper presented at Computational Aesthetics in 2013; an expanded version of the paper, including a discussion of the history of artworks making use of cellular automata concepts, was published as a chapter in the 2014 Springer volume, Cellular Automata in Image Processing and Geometry, edited by Paul Rosin, Andrew Adamatzky, and Xianfang Sun.

Additional creative works can be found here.