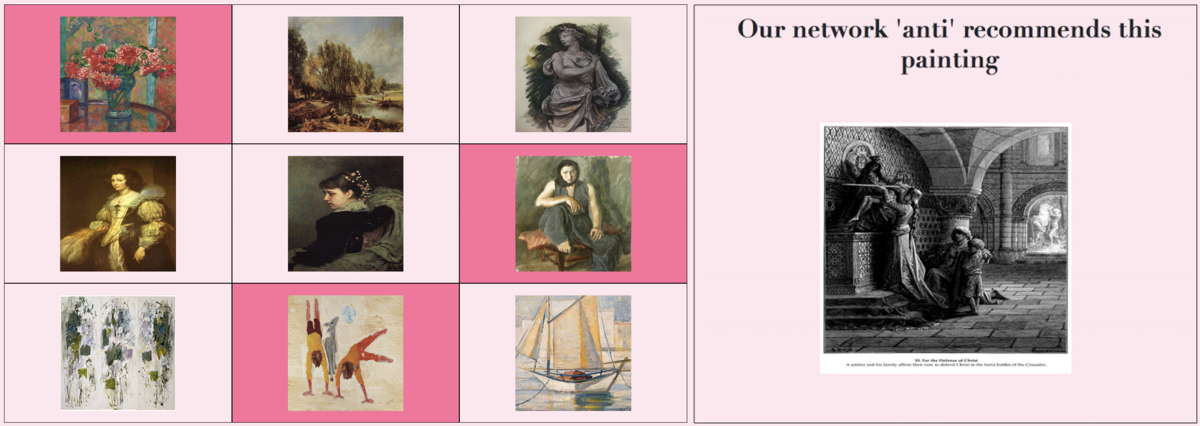

Art I Don’t Like is a web-based interactive art experience that provides personalized content to users and emphasizes the introduction of disparate content. We suggest a new “anti-recommender” system that provides content that is aesthetically related in terms of low-level features but challenges the implied conceptual frameworks indicated by initial user selections. Furthermore, we demonstrate an application of recommender technologies to visual art in an effort to expose users to a broad range of art genres. We present details of a prototype implementation trained on a subset of the WikiArt dataset, consisting of 52,000 images of art from 14th- to 20th-century European painters, along with feedback from users. Art I Don’t Like is on the web at http://www.artidontlike.com. A paper describing the project by Sarah Frost, Manu Mathew Thomas, and Angus Forbes was accepted to Museums and the Web, presented by Sarah Frost in April 2019.
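The anti-recommender idea can be read as a ranking that rewards similarity in low-level visual features while penalizing conceptual similarity. The following is a minimal sketch under that reading, not the prototype's actual method: the two feature matrices, the function names, and the simple difference-of-similarities score are all assumptions made for illustration, and every feature vector is assumed to be nonzero.

```python
import numpy as np

def cosine_sim(query, matrix):
    """Cosine similarity between one vector and each row of a matrix."""
    q = query / np.linalg.norm(query)
    m = matrix / np.linalg.norm(matrix, axis=1, keepdims=True)
    return m @ q

def anti_recommend(selected, low_level, concept, k=3):
    """Rank artworks that look like `selected` but differ conceptually.

    low_level and concept are (n_items, dim) arrays. Both are hypothetical
    stand-ins for whatever features a real system would extract.
    """
    look_alike = cosine_sim(low_level[selected], low_level)
    concept_alike = cosine_sim(concept[selected], concept)
    score = look_alike - concept_alike   # high: similar look, different concept
    score[selected] = -np.inf            # never return the selection itself
    return np.argsort(score)[::-1][:k].tolist()
```

A conventional recommender would add the two similarities; subtracting the conceptual term is what pushes the ranking toward unfamiliar genres.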

This project, a collaborative investigation by Esteban Garcia Bravo, Andres Burbano, Vetria Byrd, and Angus Forbes, examines the history of the influential Interactive Image computer graphics showcase, which took place at museum and conference venues from 1987 to 1988. It explores the historical contexts that led to the creation of this exhibition by the Electronic Visualization Lab (EVL), which included the integrated efforts of both artists and computer scientists. In addition to providing historical details about this event, the authors introduce a media archaeology approach for examining the cultural and technological contexts in which this event is situated. The project was presented at SIGGRAPH’17 and published in Leonardo, the journal of the International Society for the Arts, Sciences and Technology.

Biostomp is a new musical interface that uses mechanomyography (MMG) as a biocontrol mechanism in live performance situations. Designed in the form of a stomp box, Biostomp translates a performer’s muscle movements into control signals. A custom MMG sensor captures the acoustic output of muscle tissue oscillations resulting from contractions. An analog circuit amplifies and filters these signals, and a microcontroller translates the processed signals into pulses. These pulses are used to activate a stepper-motor mechanism, which is designed to be mounted on the parameter knobs of effects pedals. The primary goal in designing Biostomp is to offer a robust, inexpensive, and easy-to-operate platform for integrating biological signals into both traditional and contemporary music performance practices without requiring intermediary computer software.

Biostomp was developed by Çağrı Erdem with Anıl Çamcı and presented at NIME’17.
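The step from a filtered muscle signal to discrete pulses can be illustrated in software, although Biostomp itself performs the conditioning in an analog circuit and a microcontroller. The sketch below assumes a rectify-and-smooth envelope followed by a threshold with hysteresis; the window size, the threshold values, and the function names are hypothetical and are not taken from the device.

```python
def envelope(samples, window=4):
    """Rectify the signal and smooth it with a moving average."""
    rectified = [abs(s) for s in samples]
    out = []
    for i in range(len(rectified)):
        chunk = rectified[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out

def pulses(env, on=0.5, off=0.3):
    """Return sample indices where the envelope rises to `on` or above.

    Hysteresis: after a pulse, the envelope must fall below `off`
    before another pulse can fire.
    """
    active = False
    fired = []
    for i, level in enumerate(env):
        if not active and level >= on:
            active = True
            fired.append(i)
        elif active and level < off:
            active = False
    return fired
```

Using two thresholds rather than one keeps a contraction that hovers near the trigger level from producing a burst of spurious motor steps.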

The Chicago 00 Riverwalk AR experience provides a novel way for users to explore historical photographs sourced from museum archives. As users walk along the Chicago River, they are instructed to use their smartphone or tablet to view these photographs alongside the current views of the city. By matching location and view orientation, the Riverwalk application creates an illusion of “then and now” coexisting. This superimposition of the historical photographer’s view and the user’s view is the basis of educational engagement for the user and a key factor in curating the images and the narrative surrounding them, facilitating a meaningful museum experience in a public, outdoor context. The first episode of the Riverwalk AR experience focuses on a single block between N. LaSalle and Clark Streets, the site of the Eastland Disaster in 1915. The site was selected because of the importance of this historical event (the sinking of the Eastland cruise ship 100 years ago was the largest single loss of life in Chicago’s history) and because of the abundant media available in the archive, including extensive photographic documentation, newspapers, and film reels. The Riverwalk project is led by Geoffrey Alan Rhodes in collaboration with the Chicago History Museum; Marco Cavallo developed technology to facilitate the creation of public outdoor AR experiences.
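Matching location and view orientation can be sketched as two checks: the user stands close to where the photograph was taken, and the device points in roughly the same compass direction. The example below is only an illustration of that idea, not the Riverwalk application's code; the tuple layout, the function names, and both thresholds are assumptions.

```python
import math

EARTH_RADIUS_M = 6371000.0

def distance_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters (haversine formula)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def heading_gap(a_deg, b_deg):
    """Smallest absolute angle between two compass headings, in degrees."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)

def view_matches(user, photo, max_dist_m=15.0, max_gap_deg=20.0):
    """True when the user stands near the photographer's spot and faces the same way.

    `user` and `photo` are (lat, lon, heading_deg) tuples; thresholds are illustrative.
    """
    near = distance_m(user[0], user[1], photo[0], photo[1]) <= max_dist_m
    aligned = heading_gap(user[2], photo[2]) <= max_gap_deg
    return near and aligned
```

The heading comparison wraps around north, so headings of 355 and 5 degrees are treated as 10 degrees apart rather than 350.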

Psionic is a JavaScript framework for generating visual music. A wide range of motions can be defined using the system, which enables the creation of animation sequences of particles generated from a series of input images. Psionic was first developed for a performance of a composition by Christopher Jette at Riverside Hall in Iowa City in 2016 featuring Courtney Miller on oboe and images by printmaker Terry Conrad. Source code can be found at the Creative Coding Research Group code repository.

Anıl Çamcı’s Distractions brings invisible and inaudible signals into the kinetic domain. By picking up the electromagnetic waves in the exhibition space, it visualizes the signals communicating with the mobile devices that are brought into this space by the visitors. Such signals, which would go unnoticed by human perception, represent some of the most prevalent sources of distraction in our everyday lives. The work comments not only on the artist’s process, which is inherently plagued with such distractions, but also on the relationship between modern audiences and exhibition spaces. Relying exclusively on digital computing techniques, such as depth imaging, signal processing, audio synthesis, and numeric milling, Distractions visualizes data, without using computer displays, through infrasound vibrations that activate a point cloud of the artist’s head. This work was first presented at the Art.CHI Inter/Action exhibition as part of ACM CHI in May 2016.

Node Kara is an audiovisual mixed reality installation created by Anıl Çamcı. It offers body-based interaction using 3D imaging of the exhibition space. Node and kara are two words in Japanese that indicate causality. While node is used to describe natural cause-and-effect relationships, kara is used when a causality is interpreted subjectively. Although a clear causal relationship between actions and reactions is a staple of interaction design, the user’s subjective experience of such relationships often trumps the designer’s predictions. By adopting blurring both as a theme and a technique, the piece obfuscates the causal link between an interactive artwork and its audience. The deblurring of the audiovisual scene becomes an attracting force that invites the viewer to unravel the underlying clarity of Node Kara. This comes, however, at the cost of losing a broader perspective of the work, as the viewer needs to come closer to both see and hear the work in greater detail. This work was first presented at the IEEE VR Workshop on Mixed Reality Art in March 2016.

DigitalQuest facilitates the creation of mixed reality applications, providing application designers with the ability to integrate custom virtual content within the real world. It supports the creation of futuristic “scavenger hunts”, where multiple users search for virtual objects positioned in the real world and where each object is related to a riddle or a challenge to be solved. Each player can compete with the other participants by finding virtual objects and solving puzzles, thereby unlocking additional challenges. Virtual objects are represented by animated 3D meshes locked to a determined position in the real world. The objects are activated when a player gets within a proximity threshold and then taps the object on the screen of his or her mobile phone. In our demonstration application, configurable virtual content appears, followed by a question that must be answered in order to pass to the next challenge. The displayed content may consist of images, video and audio streams, graphical effects, or a text message that provides hints on how to advance in the game. The editor makes it easy to create puzzles that can be solved by exploring the surroundings of the virtual object in order to discover clues and by making use of location-specific knowledge. When a participant figures out the correct solution, he or she scores points related to the complexity of the challenge and also unlocks remaining puzzles that cause new virtual objects to appear in the world. At the end of the event, the player with the most points wins. DigitalQuest was first presented at the IEEE VR Workshop on Mixed Reality Art in March 2016.
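The game loop described above (proximity activation, a question to answer, points scaled to difficulty, and unlocking of further objects) can be summarized in a short sketch. This is not DigitalQuest's implementation: the class names, the flat local coordinate system in meters, the 10-meter radius, and the case-insensitive answer check are all assumptions, and repeated answers by the same player are deliberately left unhandled.

```python
from dataclasses import dataclass, field
import math

@dataclass
class Challenge:
    name: str
    position: tuple          # (x, y) in meters, local coordinates
    answer: str
    points: int
    unlocks: list = field(default_factory=list)  # names of challenges revealed on success

class Quest:
    def __init__(self, challenges, visible, radius_m=10.0):
        self.challenges = {c.name: c for c in challenges}
        self.visible = set(visible)   # challenges currently shown in the world
        self.radius_m = radius_m
        self.scores = {}

    def can_activate(self, name, player_pos):
        """An object responds to a tap only if visible and within the radius."""
        if name not in self.visible:
            return False
        return math.dist(player_pos, self.challenges[name].position) <= self.radius_m

    def answer(self, player, name, player_pos, reply):
        """Score a correct reply and reveal the challenges it unlocks."""
        if not self.can_activate(name, player_pos):
            return False
        c = self.challenges[name]
        if reply.strip().lower() != c.answer.lower():
            return False
        self.scores[player] = self.scores.get(player, 0) + c.points
        self.visible.update(c.unlocks)
        return True

    def winner(self):
        """Player with the highest score, or None if nobody has scored."""
        return max(self.scores, key=self.scores.get) if self.scores else None
```

Keeping the set of visible challenges separate from the full challenge table is what lets a solved puzzle make new objects appear without any change to the objects themselves.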

Monad is a networked multimedia instrument for electronic music performance. Users interact with virtual objects in a 3D graphical environment to control sound synthesis. The visual objects in Monad expand upon the idea of optical discs with the addition of interactivity, real-time synthesis parameters, and three-dimensional motions. Every virtual object in a Monad performance is accessible by all participants rather than being assigned to individual performers. The objects therefore act less like personal music instruments and more like shared components of a musical collaboration. Monad was developed by Cem Çakmak with Anıl Çamcı and first presented at the CHI 2016 Workshop on Music and HCI. An expanded version of the paper focused on the use of virtual environments as collaborative music spaces was published in the proceedings of NIME’16.

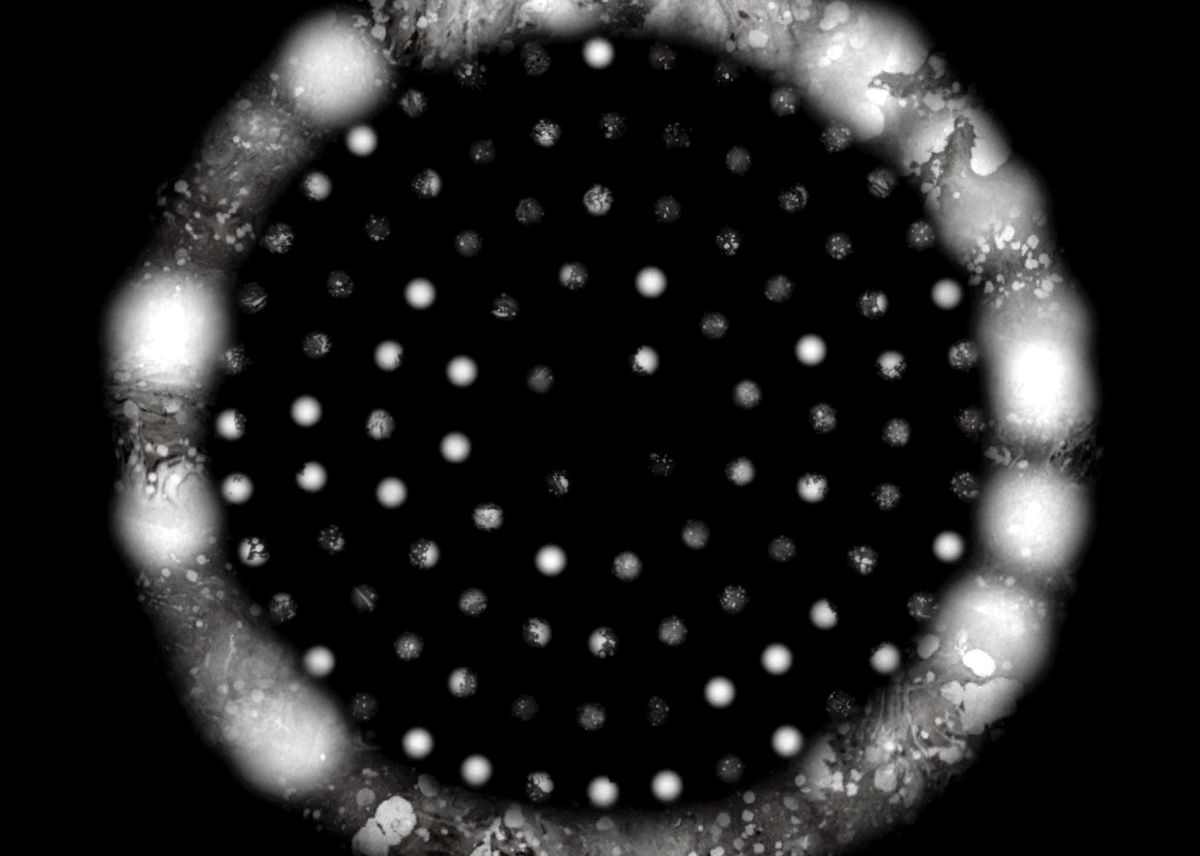

The interactive multimedia composition Annular Genealogy, created in collaboration with Kiyomitsu Odai, explores the use of orchestrated feedback as an organizational theme. The composition is performed by two players, each of whom uses a separate digital interface to create and interact with the parallel iterative processing of compositional data in both the aural and visual domains. In the aural domain, music is generated using a stochastic process that sequences tones mapped to a psycho-acoustically linear Bark scale. The timbre of these tones and the parameters governing their sequencing are determined from various inputs, including especially the 16-channel output of the previous pass fed back into the system via a set of microphones. In the visual domain, animated, real-time graphics are generated using custom software to create an iterative visual feedback loop. The composition brings various layers of feedback into a cohesive compositional experience. These feedback layers are interconnected, but can be broadly categorized as physical feedback, internal or digital feedback, interconnected or networked feedback, and performative feedback. An article describing our approach was presented at ICMC’12.
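To make the idea of sequencing tones on a Bark scale concrete, the sketch below converts between Hz and Bark using Traunmüller's published approximation (without its low- and high-end corrections) and generates a bounded random walk in Bark units. The random walk is an assumption chosen for illustration; the stochastic process used in the composition is not specified here, and the function names and default values are hypothetical.

```python
import random

def hz_to_bark(f):
    """Traunmüller's (1990) approximation of the Bark scale."""
    return 26.81 * f / (1960.0 + f) - 0.53

def bark_to_hz(z):
    """Inverse of hz_to_bark."""
    return 1960.0 * (z + 0.53) / (26.28 - z)

def bark_walk(steps, start=8.0, max_step=1.0, low=1.0, high=20.0, seed=None):
    """Random walk in Bark units, returned as frequencies in Hz."""
    rng = random.Random(seed)
    z = start
    tones = []
    for _ in range(steps):
        # Step by a random amount, then clamp to the allowed Bark range.
        z = min(high, max(low, z + rng.uniform(-max_step, max_step)))
        tones.append(bark_to_hz(z))
    return tones
```

Because each step is taken in Bark units rather than in Hz, equal step sizes correspond to roughly equal perceived pitch distances across the range.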

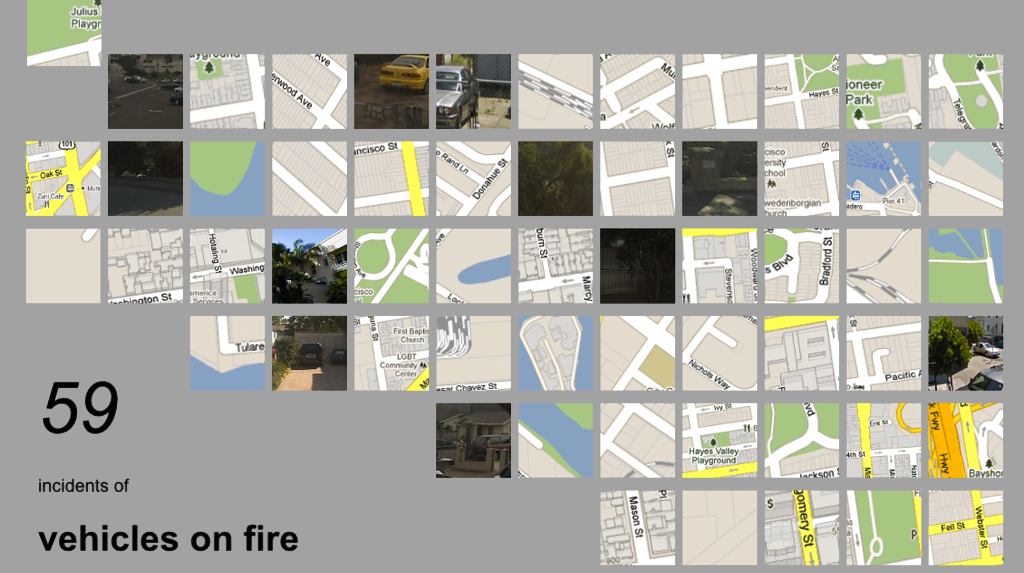

Infrequent Crimes is a data visualization piece that iterates through a list of unusual, uncommon crimes that occurred in San Francisco within the last year.

Each square accompanying a type of crime represents one incident and displays the location of that crime. The longitude and latitude of each incident, gathered from San Francisco police reports, are indicated either by a map tile or by an image taken from Google Maps Street View.

Infrequent Crimes was part of the Super Santa Barbara 2011 exhibition at Contemporary Arts Forum in Santa Barbara, California, curated by Warren Schultheis. It was also featured at Spread: California Conceptualism, Then and Now at SOMArts in San Francisco, CA, curated by OFF Space. Excerpts can be seen here.

Coming or going is a fixed video piece created with custom software in which drums are used to trigger the creation of procedurally generated geometric abstractions and the application of various visual effects. This project was most recently shown as part of Idea Chain, the Expressive Arts Exhibition, at Koç University Incubation Center in Istanbul, Turkey (2015); and was featured in AVANT-AZ at Exploded View Microcinema in Tucson, Arizona (2014), curated by David Sherman and Rebecca Barten. An early version of the software was used in a live performance with live coder Charlie Roberts at Something for Everyone, the Media Arts and Technology End-of-the-Year festival at University of California, Santa Barbara (2009).

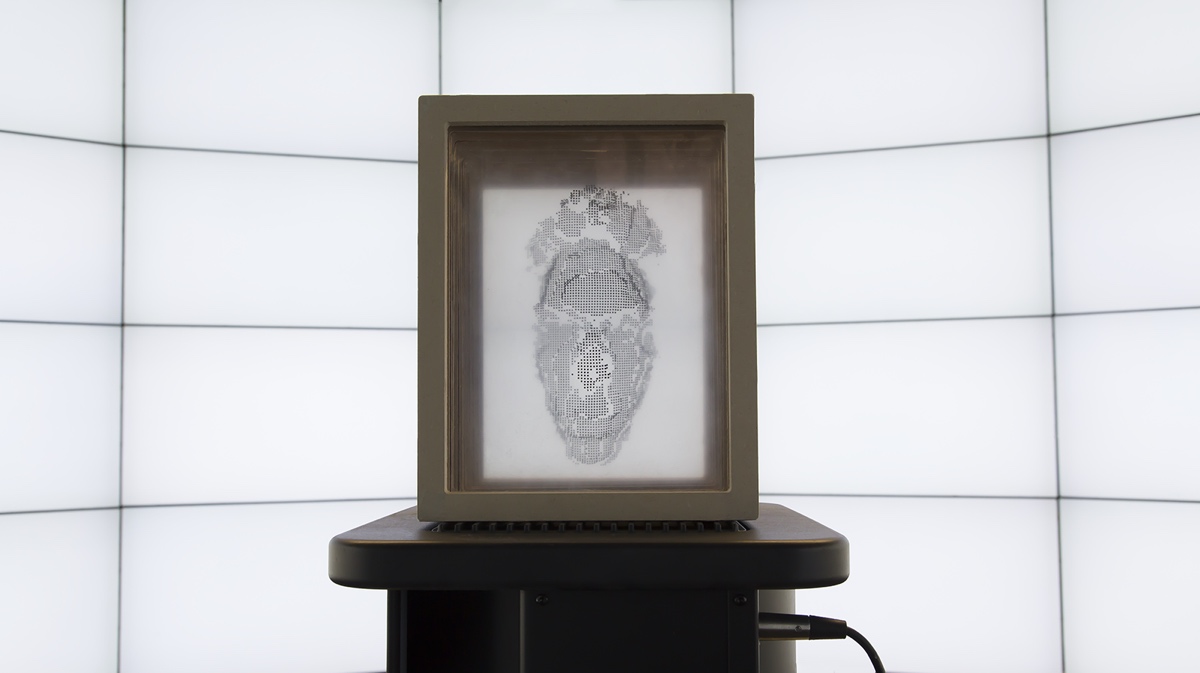

The New Dunites is a site-specific media art project comprised of research, an augmented reality application, and an interactive multimedia installation. The project investigates a culturally unique and biologically diverse geographic site, the Guadalupe-Nipomo Coastal Dunes. Buried under these dunes are the ruins of the set of DeMille’s 1923 epic film, “The Ten Commandments.” The project employed Ground Penetrating Radar (GPR) technology to gather data on this artifact of film history. In an attempt to articulate and mediate the interaction between humans and this special environment, the New Dunites project, led by Andres Burbano, Solen Kiratli DiCicco, and Danny Bazo in collaboration with Angus Forbes and Andrés Barragán, constructed an ecology of interfaces (from mobile device apps to gallery installations) that made use of this data as their primary input. The artistic outputs include interactive data visualization, physical data sculpture, novel temporal isosurface reconstructions of the original film, and video documentation describing the data collection process and introducing the project as a work of media archaeology. The project was selected for an “Incentivo Produccion” award by Vida 13.0 and has been presented at the Todaiji Culture Center in Nara, Japan. A write-up of the project was published at ACM MM’12.

ISEA’11

Poznan Biennale

National Theatre Poitiers

Ford Gallery

Beall Center for Art

Davis Museum and Cultural Center

Lawrence Hall of Science

Project page

Cell Tango is a dynamically evolving collection of cellphone photographs contributed by the general public. The images and accompanying descriptive categories are projected large scale in the gallery and dynamically change as the image database grows over the course of the installation. The project is a collaboration with artist George Legrady and (in later iterations) composer Christopher Jette. Cell Tango was featured at the Inauguration of the National Theatre Poitiers, organized by Hubertus von Amelunxen, Poitiers, France (2008); as a featured installation at Ford Gallery, Eastern Michigan University, Ypsilanti (2008-2009), curated by Sarah Smarch; as part of “Scalable Relations,” curated by Christiane Paul, Beall Center for Art & Technology, UC Irvine (2009); and as a featured installation at the Davis Museum and Cultural Center, Wellesley College, Wellesley (2009), curated by Jim Olson. Sonification was added and premiered at the Lawrence Hall of Science, UC Berkeley (2010), and featured at the Poznan Biennale, Poland (2010). More information about the project can be found here.

Data Flow consists of three dynamically generated data visualizations that map members’ interactions with Corporate Executive Board’s web portal. The three visualizations are situated on the “Feature Wall” from the 22nd to the 24th floor of the Corporate Executive Board Corporation, Arlington, Virginia. Each visualization consists of three horizontally linked screens that display animations at a resolution of 4080 x 768 pixels. The flow of information is as follows: CEB IT produces appropriately formatted data, which is retrieved every ten minutes by the Data Flow project server and stored in a local database, where it is kept for 24 hours. The project server also retrieves longitude and latitude for location data and discards any data that does not meet the requirements of the visualizations. The stored data is then forwarded to three visualization computers, each of which processes the received data according to its individual animation requirements. Data Flow was developed in collaboration with George Legrady in 2009, commissioned by Gensler Design. More information about the project can be found here and here.
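The server-side flow described above (periodic retrieval, filtering, 24-hour retention, and fan-out to three visualization machines) can be approximated with a small in-memory model. This is a sketch only: the field names, the class, and the callable-per-visualization interface are hypothetical, the ten-minute scheduling and the geocoding lookup are omitted, and records are assumed to arrive with latitude and longitude already attached.

```python
from datetime import datetime, timedelta

RETENTION = timedelta(hours=24)
REQUIRED = ("member_id", "lat", "lon")   # hypothetical field names

class FlowStore:
    """In-memory stand-in for the project server's local database."""

    def __init__(self):
        self.rows = []   # list of (fetched_at, record)

    def ingest(self, records, now):
        """Keep only records that carry every required field."""
        kept = [r for r in records if all(r.get(k) is not None for k in REQUIRED)]
        self.rows.extend((now, r) for r in kept)
        return len(kept)

    def purge(self, now):
        """Drop rows older than the retention window."""
        self.rows = [(t, r) for t, r in self.rows if now - t <= RETENTION]

    def snapshot(self):
        """Return the stored records without their timestamps."""
        return [r for _, r in self.rows]

def forward(store, visualizations):
    """Hand the current snapshot to each visualization's own processing step."""
    data = store.snapshot()
    return {name: process(data) for name, process in visualizations.items()}
```

In a deployment along these lines, a scheduler would call `ingest` and `purge` at each retrieval interval and then `forward` the snapshot, so that each visualization always works from at most one day of data.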